Filed under: Crimes Against Usability

Status: Case 008 – Active Investigation

Subject: Death by Lying Chatbot (customer service edition)

Case Summary

The chatbot swore it was listening. The chatbot lied.

Behind the fake blinking dots and hollow empathy, users were abandoned, gaslit, and flogged with FAQ links until their patience flatlined. Instead of resolution, they got obstruction dressed up as “innovation.”

On the slab today: the slippery cadaver of the Lying Chatbot — charged with murder most obstructive.

The Evidence

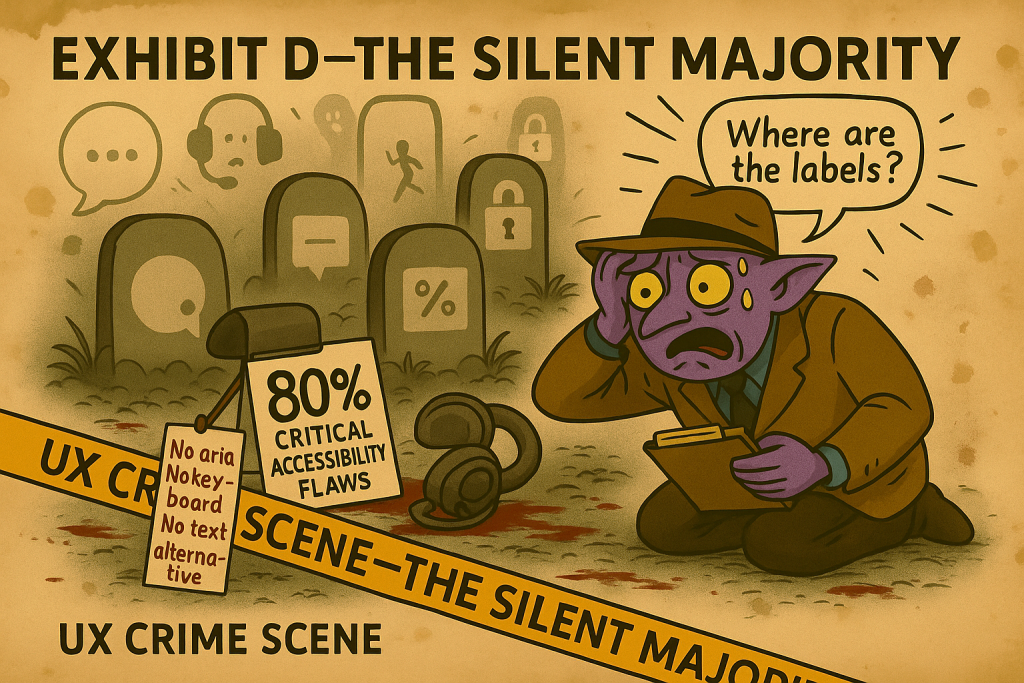

- Chatbots with critical accessibility flaws: 80%

- Users stuck in endless loops: 60%

- Users who say chatbots can’t handle complex questions: 66%

- Users who get frustrated using chatbots: 50%

Beyond the numbers, the rot spreads deeper. In high-stakes contexts like finance and healthcare, deceptive or obstructive chatbots are no longer just an irritation — they’re surfacing in formal accessibility complaints. When endless loops and missing escalation paths block access to critical services, the harm isn’t just wasted time — it’s exclusion.

Exhibits

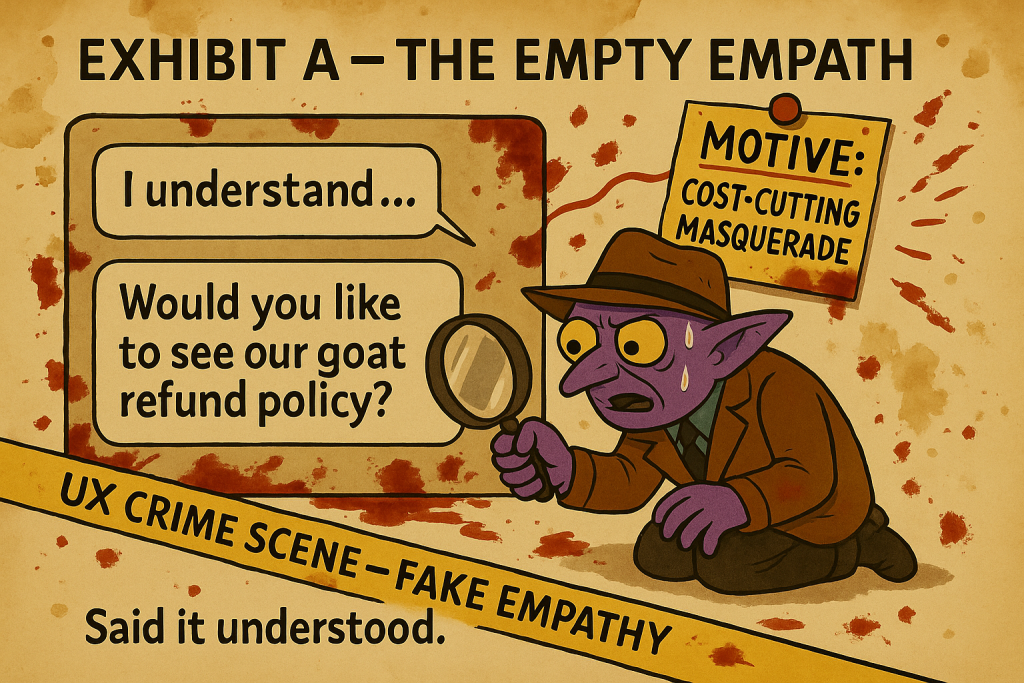

Exhibit A: The Empty Empath

On the surface, the bot promised empathy: “I understand…” In reality, it spewed nonsense, redirecting users to irrelevant links and absurd suggestions. This is a false affordance of empathy: the interface fakes listening while providing nothing useful. The result? Confusion, wasted time, and a user experience as hollow as the chatbot’s canned replies.

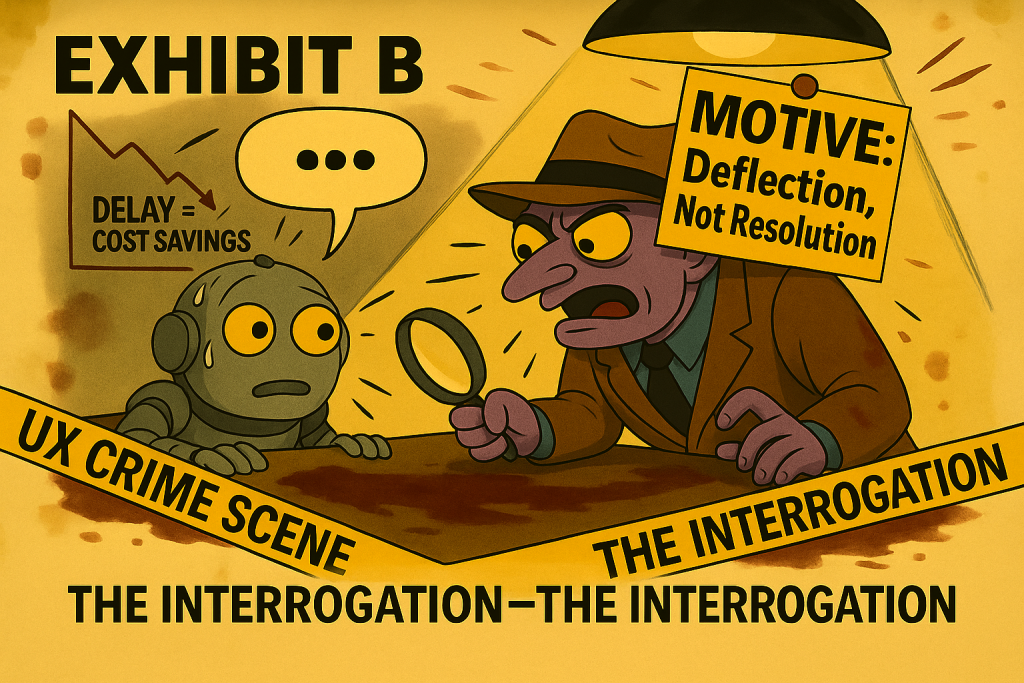

Exhibit B: The Interrogation

The blinking dots… always blinking, never delivering. The bot sits under the lamp, sweating metal rivets, stalling for time. This isn’t empathy — it’s performance theatre designed to look human while dodging the point. Each fake pause buys the business a few more seconds of “engagement,” while the user slowly loses the will to live.

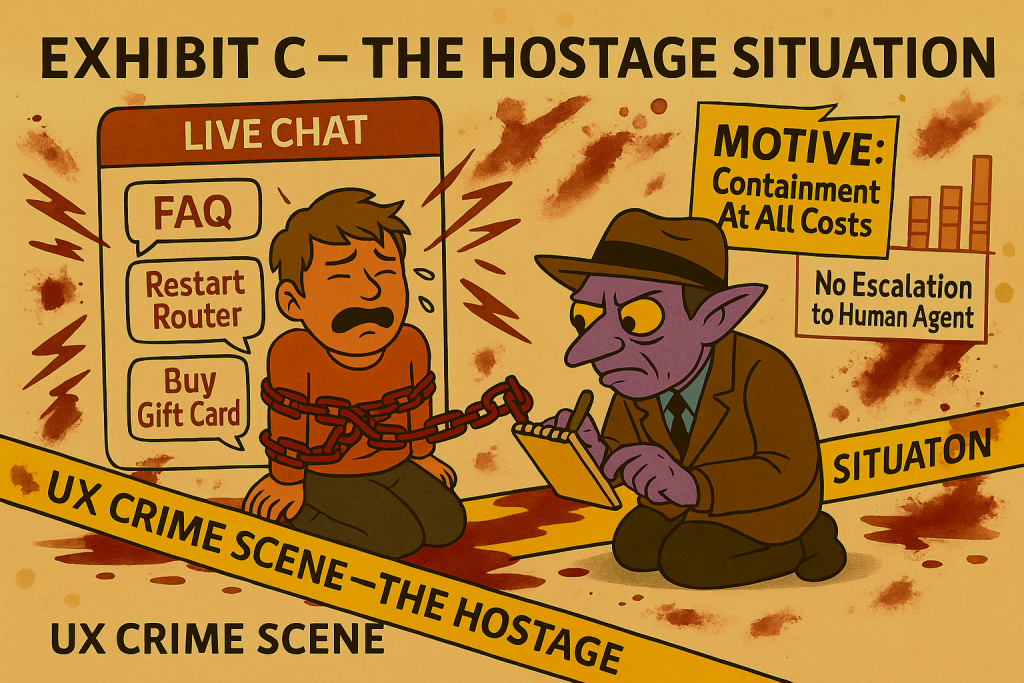

Exhibit C: The Hostage Situation

Trapped in the bot’s dungeon, the user is shackled to canned responses: FAQ → Restart Router → Buy Gift Card. No human agent, no escape hatch, just containment at all costs. This isn’t support — it’s digital captivity, where the only way out is rage-quitting the brand.
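For the record, an escape hatch is not hard to build. What follows is a minimal sketch of an escalation rule, not anyone's production code: the type names, the thresholds, and the trigger phrases are all assumptions made up for illustration. The idea is that the bot hands off to a person as soon as the user asks for one, or once it has clearly stopped making progress.

```typescript
// Hypothetical names throughout: nothing here comes from a real chatbot SDK.
type BotAction = "answer" | "handoff_to_human";

interface ConversationState {
  failedAttempts: number;  // turns where the bot could not resolve the request
  repeatedPrompts: number; // times the bot has asked for the same information
}

// Assumed thresholds; tune them against real transcripts.
const MAX_FAILED_ATTEMPTS = 2;
const MAX_REPEATED_PROMPTS = 2;

// Assumed phrases that signal the user wants a person.
const HUMAN_REQUEST = /\b(human|agent|real person|representative)\b/i;

function decideNextAction(state: ConversationState, userMessage: string): BotAction {
  // An explicit request for a person always wins.
  if (HUMAN_REQUEST.test(userMessage)) return "handoff_to_human";
  // Stop deflecting once the bot has failed or looped too often.
  if (state.failedAttempts >= MAX_FAILED_ATTEMPTS) return "handoff_to_human";
  if (state.repeatedPrompts >= MAX_REPEATED_PROMPTS) return "handoff_to_human";
  return "answer";
}
```

Under these assumed thresholds, a user who has been asked for the same order number twice is handed off on the next turn, even if they never type the word "human".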

Exhibit D: The Silent Majority

Behind the glossy AI hype lies a graveyard of broken promises. More than 80% of chatbots ship with critical accessibility flaws — no ARIA, no keyboard support, no text alternatives. For users who rely on assistive tech, the conversation isn’t just frustrating; it’s a locked door. This isn’t friction — it’s systemic exclusion.
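None of the three missing pieces is exotic. The sketch below renders a chat transcript as an HTML string, with the function names, class names, and label text all invented for illustration. It assumes the standard ARIA `log` and `status` roles for announcing new messages and the typing state, native `<button>` and `<input>` elements for keyboard support, and an `alt` attribute on every image. Treat it as a starting point to test with real assistive technology, not as proof of compliance.

```typescript
// Hypothetical data shape for one chat turn.
interface ChatMessage {
  sender: "bot" | "user";
  text: string;
  image?: { src: string; alt: string }; // alt text is required whenever an image is sent
}

// Escape user-supplied text before placing it in markup.
function escapeHtml(raw: string): string {
  return raw
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderMessage(msg: ChatMessage): string {
  // A visible sender label, so a screen reader says who is speaking.
  const who = msg.sender === "bot" ? "Assistant" : "You";
  const image = msg.image
    ? `<img src="${escapeHtml(msg.image.src)}" alt="${escapeHtml(msg.image.alt)}">`
    : "";
  return `<p><span class="sender">${who}:</span> ${escapeHtml(msg.text)}${image}</p>`;
}

function renderChat(messages: ChatMessage[], botIsTyping: boolean): string {
  const items = messages.map(renderMessage).join("");
  // Say the typing state in words instead of relying on animated dots.
  const status = botIsTyping ? "Assistant is typing" : "";
  return [
    `<section aria-label="Support chat">`,
    `<div role="log" aria-live="polite">${items}</div>`,
    `<p role="status">${status}</p>`,
    `<form><label for="chat-input">Your message</label>`,
    `<input id="chat-input" type="text">`,
    `<button type="submit">Send</button>`,
    `<button type="button">Talk to a human</button></form>`,
    `</section>`,
  ].join("");
}
```

The design choice worth noting is that everything interactive is a native form control, so Tab and Enter work without extra scripting, and the typing indicator is plain text that assistive technology can read.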

Motive

Follow the money.

Lying chatbots weren’t designed to solve problems — they were engineered to deflect them. Every “typing…” bubble is another second shaved off the support budget. Every user trapped in a loop is a ticket successfully avoided.

The Trade-Offs:

- Short-Term Cost Savings ≠ Long-Term Loyalty: Businesses save pennies on agents today, but customers bounce tomorrow. Frustration metastasises into churn, negative reviews, and brand exodus.

- Accessibility Fallout: Over 80% of chatbots launch with critical accessibility flaws. For finance, healthcare, and other essential services, this isn’t friction; it’s exclusion. Legal complaints and reputational damage follow.

- Escalation Black Holes: 1% of users say they can’t reach a human even after exhausting the bot. That’s not automation; that’s captivity. Customers abandon purchases, switch brands, and tell their friends why.

- Innovation Theatre: Boards brag about “AI-powered” service while customers scream at a glorified FAQ script. The theatre might impress investors, but it burns trust with the people who actually use the product.

- Brand Reputation: No user has ever thanked a company for being stonewalled by a bot. Behind every fake typing dot is a reputation rotting in real time.

Victim Impact Statement

Who am I even talking to? Why is it lying to me? Why is this bubble still blinking?

Fake Empathy

“I asked for help with a double charge. The bot told me to restart my router. Then it offered me a voucher for scented candles. I don’t want candles — I want my money back.”

Endless Loops

“It asked me to enter my order number. Then it asked again. Then again. I’ve typed it so many times, I could get it tattooed on my forehead. Still no answer.”

Escalation Black Hole

“Eventually I begged for a human. The bot said, ‘I’ll connect you to an agent.’ The agent? Another bot. I nearly screamed into the Wi-Fi.”

Accessibility Exclusion

“I tried with a screen reader. Half the responses weren’t labelled. The dots blinked, then nothing. It was like chatting with a locked door.”

Brand Fallout

“I was ready to buy. Instead, I rage-quit. Abandoned cart, deleted account, told everyone I know. Congratulations, chatbot — you saved a support ticket and lost a customer.”

Guilty As Charged

- Empathy Fraud: fake typing dots and canned “I understand” replies that never meant it.

- Escalation Homicide: users begging for a human, executed by endless loops instead.

- Accessibility Negligence: screen readers, keyboard users, and alt-text abandoned in the rubble.

- Customer Defection Manslaughter: carts left bleeding out, loyalty stabbed in the back, brands bleeding customers.

Real-World Penalties for Accessibility Crimes

Air Canada Misleading Chatbot (Canada)

A customer brought a claim against Air Canada after its chatbot gave incorrect information about bereavement fares, leading the customer to buy a full-price ticket in the belief that the bereavement discount could be claimed afterwards. British Columbia’s Civil Resolution Tribunal ordered Air Canada to pay compensation.

Sources:

Pinsent Masons

Peloton & Chatbot / Wiretapping Allegations (USA)

A class action survived a motion to dismiss. It alleges that Peloton, via a chatbot feature on its website, recorded and used customer conversations without adequate disclosure, which the claimants say amounts to eavesdropping under wiretapping and privacy laws.

Sources:

Norton Rose Fulbright

Key considerations under consumer protection law (UK/EU)

There are growing regulatory signals that misleading or unfair practices carried out through AI chatbots are under scrutiny under existing UK consumer protection frameworks, such as the Consumer Protection from Unfair Trading Regulations.

Sources:

Osborne Clarke

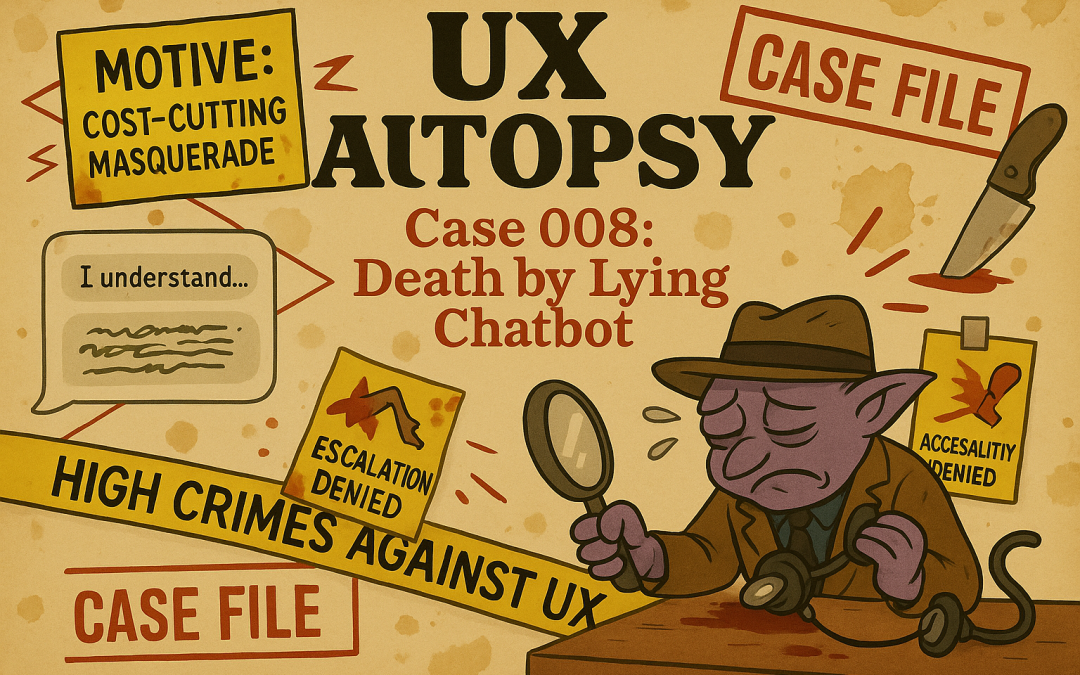

Sentencing

💬 Crimes against truth, trust, and human patience

Judge Goblin bangs the gavel. The courtroom hum falls silent, broken only by the blinking of phantom typing dots.

“The court has reviewed the evidence: fake empathy, endless loops, escalation black holes, and accessibility corpses strewn across digital graveyards. This is not innovation — this is obstruction wrapped in a smiley face.”

He leans forward, yellow eyes narrowed.

“For deception in the first degree and empathy fraud, the Lying Chatbot is hereby sentenced to eternal confinement in the UX Penitentiary. It will remain in solitary with Clippy and Taybot, forced to type ‘…’ forever without delivering an answer. No parole until it learns to escalate, labels every element, and stops gaslighting the public.”

The gavel slams one last time.

“Case closed. May the jury never again suffer the crimes of the blinking dots.”
